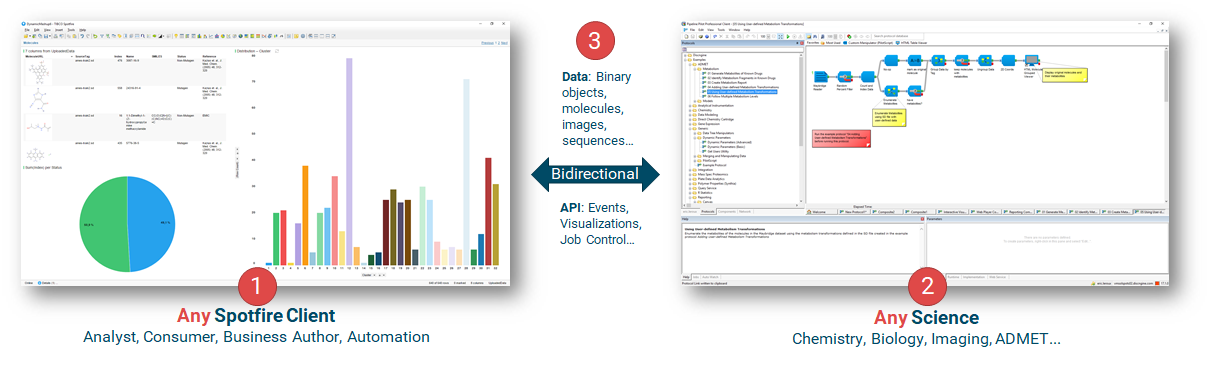

- the Client Automation: interactively build TIBCO Spotfire® documents based on user input,

- the Pipeline Pilot Data Functions: bi-directional data exchange between TIBCO Spotfire® and Pipeline Pilot,

- the Pipeline Pilot Automation Tasks: server-side TIBCO Spotfire® document authoring from Pipeline Pilot.

For those familiar with the Discngine Connector 4.1: version 5.x has been completely redesigned to be compatible with both TIBCO Spotfire® Analyst and TIBCO Spotfire® Web Player, so the same Pipeline Pilot protocol can be executed in either client. The Discngine Connector 5+ is not backward compatible with the Discngine Connector 4.1: the Pipeline Pilot components are completely different and the protocols need to be redeveloped.

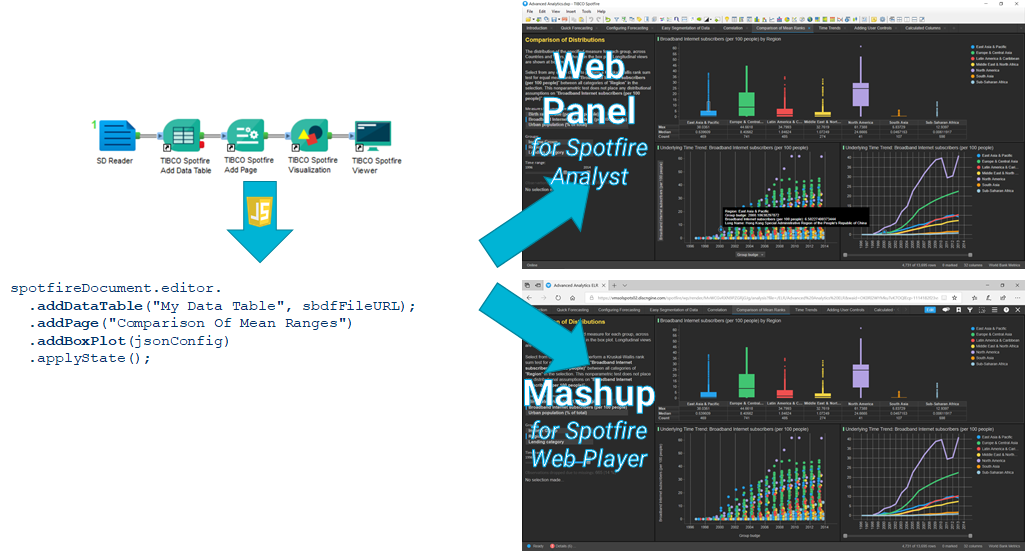

Client Automation

The objective is to allow developers to interact programmatically with the full Spotfire client API through JavaScript, in both the Analyst and Web Player clients.

Architecture overview

To integrate the content generated by Pipeline Pilot into TIBCO Spotfire®, the following components are needed:

- the TIBCO Spotfire® Analyst and Web Player extensions: they provide the Discngine Web Panel tool.

- the JavaScript API: it handles communication with the Discngine Web Panel tool.

- the collection of Pipeline Pilot components: it is based on the Reporting collection, and its components trigger calls to the JavaScript API. The collection also includes the SBDF Writer component (Java-based), which converts Pipeline Pilot data flows into the Spotfire Binary Data Format (SBDF) read by TIBCO Spotfire®. Molecules and images are supported.

The Pipeline Pilot collection provides a quick start protocol to help you get started with the Client Automation collection.

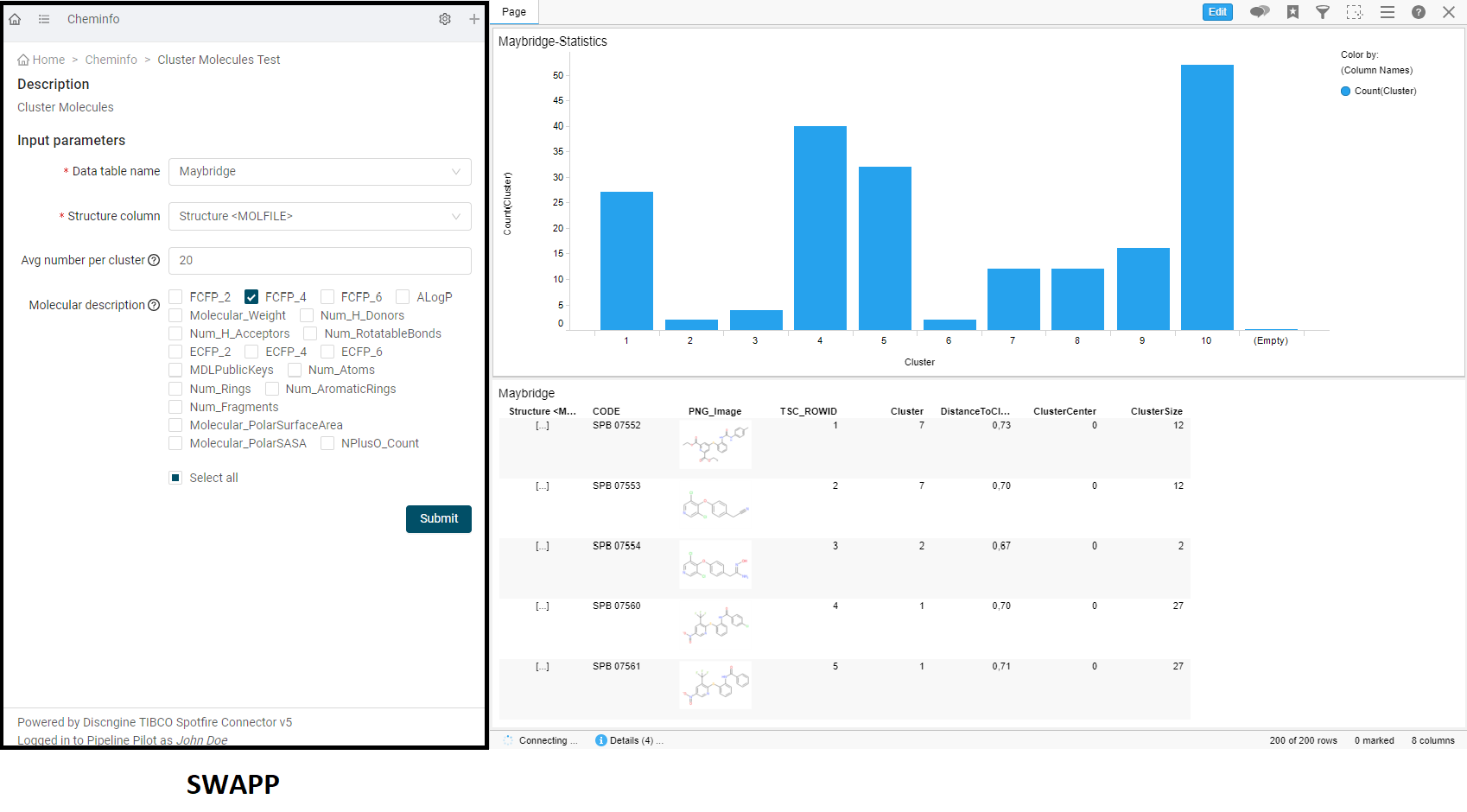

SWAPP

Developed in collaboration with one of the top pharmaceutical companies, the SWAPP (Spotfire Web Application for Pipeline Pilot) is a standalone web application designed to run in the Web Panel. It allows you to register Pipeline Pilot protocols and run them seamlessly as web forms from TIBCO Spotfire® Analyst or Web Player.

This is done in three simple steps.

- First, select which Pipeline Pilot protocol you want to use. When loading the protocol, the SWAPP will suggest the best type of input for each input parameter of the protocol.

- Once registered, you can load the protocol through the menu. The web form will allow you to set the various input parameters based on the registered types.

- After you submit the job, the output HTML is retrieved and parsed in the page. All JavaScript instructions generated by the Connector components are executed in TIBCO Spotfire®.

You can find more details on how to use the SWAPP in the relevant guide.

Pipeline Pilot Data Functions

Warning: TIBCO Spotfire® 10.3 and later releases changed how authorized users trust data functions. We strongly recommend that you read the official documentation and follow the Spotfire guidelines if this affects you.

By default, Data Functions are calculations based on S-PLUS, open-source R, SAS®, MATLAB® scripts, or R scripts running under TIBCO Enterprise Runtime for R for Spotfire, which you can make available in the TIBCO Spotfire® environment. With the Connector, you can also base Data Functions on Pipeline Pilot protocols.

With the Pipeline Pilot Data Functions, you can register TIBCO Spotfire® Data Functions that will execute Pipeline Pilot protocols. The data produced by these protocols can be inserted as a new table, new columns or new rows.

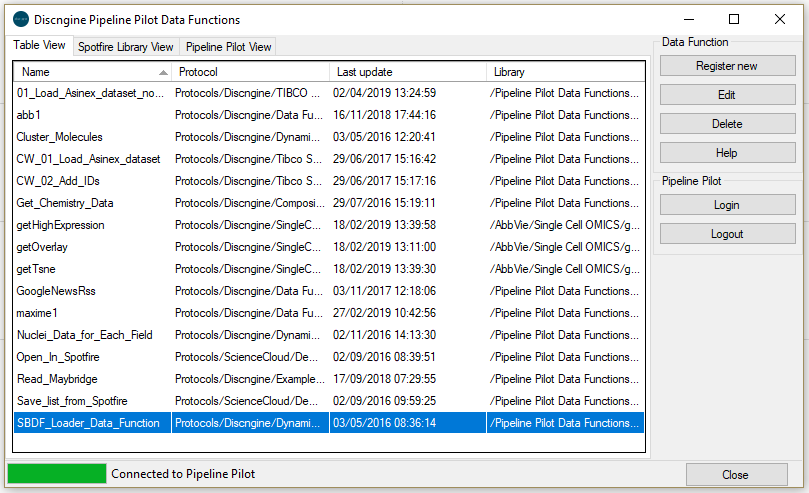

Managing and registering Pipeline Pilot Data Functions

Clicking Pipeline Pilot Data Function… in the Tools menu opens the user interface for managing all Pipeline Pilot Data Functions and registering new ones.

From this user interface, you have access to the list of currently registered Data Functions. There are three different tabs, each displaying the Data Functions in a different way.

The Table View simply lists the Data Functions in a table; the TIBCO Spotfire® Library View uses a tree showing the location of the Data Functions in the TIBCO Spotfire® library; the Pipeline Pilot View also uses a tree, this time showing where the protocol used by each Data Function is located on the Pipeline Pilot server.

To register a new Data Function, or to edit or delete an existing one, you must be connected to the Pipeline Pilot server.

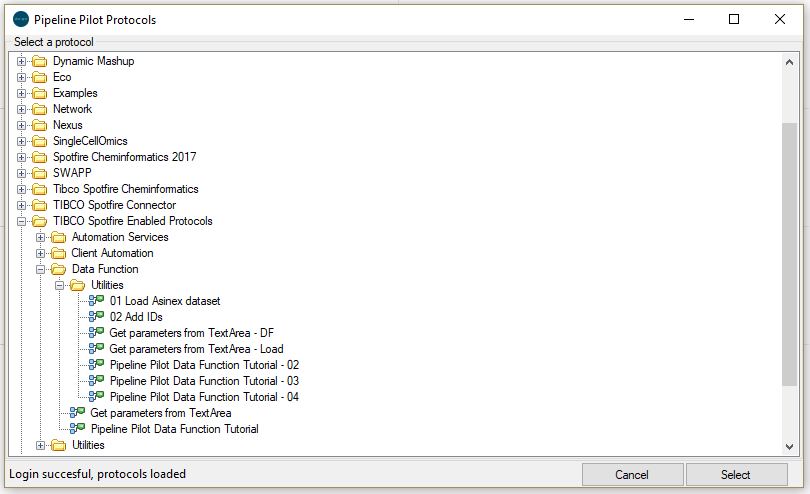

When you click Register new, the tool connects to the server and lists the available protocols.

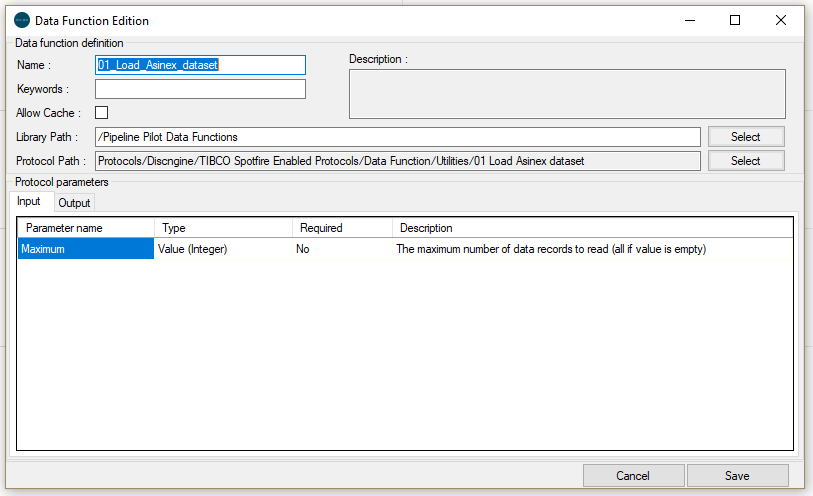

Once a protocol has been selected, the user interface displays the input and output parameters for that protocol. You can change the name of the data function, the keywords, the cache management and the library folder where the data function will be saved.

How to create a Pipeline Pilot protocol for Data Functions

Example protocol:

There are three possible types of input parameters that a Pipeline Pilot protocol might expect:

-

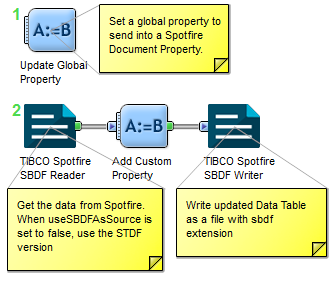

Data Table: if the protocol requires this type of input parameter, a SBDF Reader component needs to be added at the beginning of the protocol and the Source parameter of the component needs to be promoted.

-

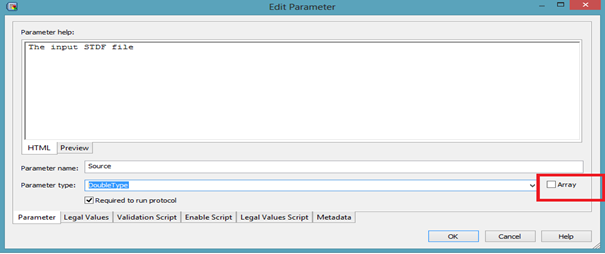

Column Data: if the protocol requires the data from a column, the protocol will need to define an input parameter and select the Array check box of the Edit Parameter interface:

-

Single Value: any protocol input parameter value that is neither a URL type nor an Array type will be considered a single value parameter.

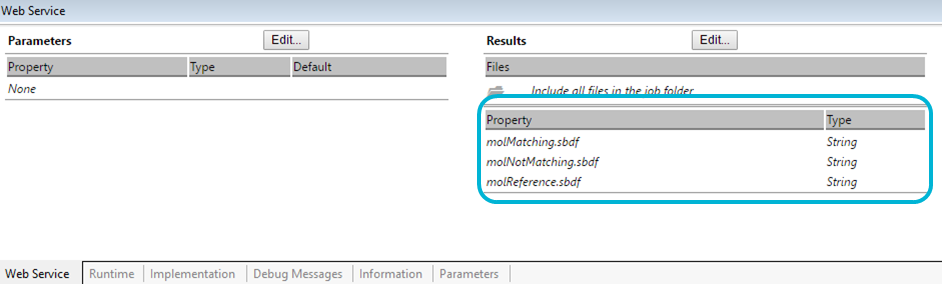

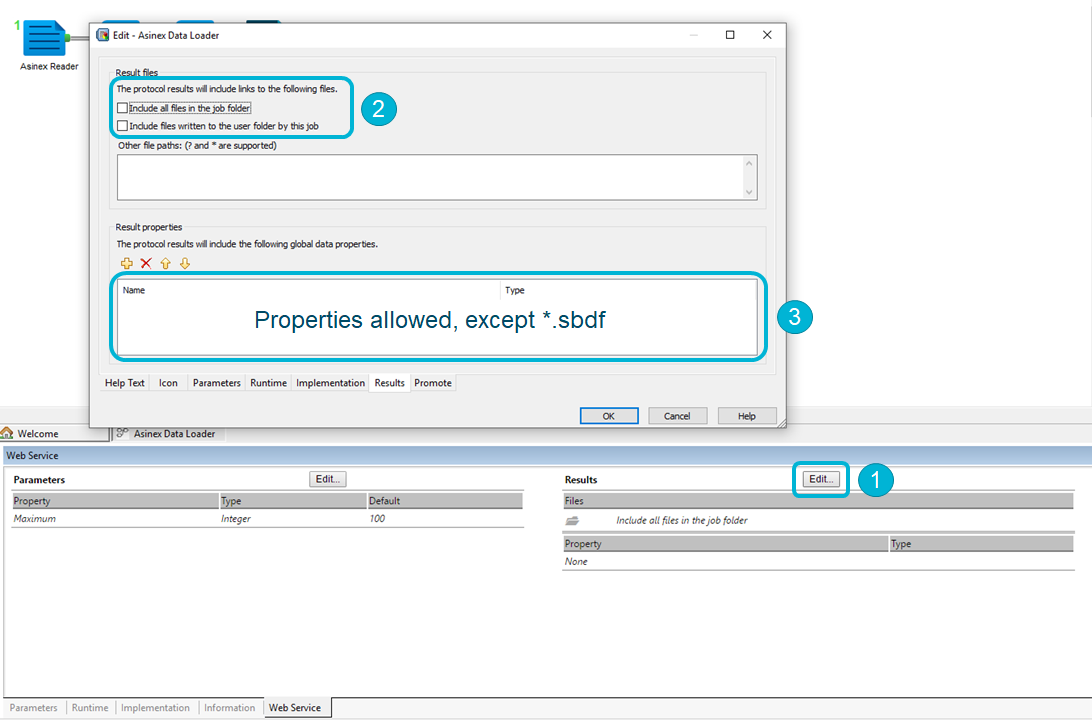
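The three input rules can be summarized as a small classification function. This is a minimal Python sketch, not part of the Connector: the ProtocolParameter type, its fields and the type names ("URL", "String", "Double") are hypothetical, and mapping URL-typed parameters to Data Table inputs is an assumption inferred from the Single Value rule (the promoted Source parameter of the SBDF Reader being URL-typed).

```python
from dataclasses import dataclass
from enum import Enum


class InputKind(Enum):
    DATA_TABLE = "Data Table"
    COLUMN = "Column Data"
    VALUE = "Single Value"


@dataclass
class ProtocolParameter:
    """Hypothetical description of a Pipeline Pilot protocol input parameter."""
    name: str
    param_type: str         # illustrative type names, e.g. "URL", "String", "Double"
    is_array: bool = False  # mirrors the Array check box of the Edit Parameter interface


def classify_input(param: ProtocolParameter) -> InputKind:
    """Return the Data Function input kind for a protocol input parameter."""
    if param.param_type == "URL":
        # Assumption: a URL-typed parameter (such as the promoted Source
        # parameter of the SBDF Reader) is exposed as a Data Table input.
        return InputKind.DATA_TABLE
    if param.is_array:
        # Array parameters receive the values of a column.
        return InputKind.COLUMN
    # Everything else is a single value.
    return InputKind.VALUE


if __name__ == "__main__":
    for p in [
        ProtocolParameter("Source", "URL"),
        ProtocolParameter("Smiles", "String", is_array=True),
        ProtocolParameter("Threshold", "Double"),
    ]:
        print(p.name, "->", classify_input(p).value)
```

The URL check comes first, so a URL-typed parameter is never reported as a column even if it were also an array; the documentation does not cover that combination.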

The outputs are read from the Results Web Service part of the protocols:

-

Files: there will be as many data tables as there are results properties with the "*.sbdf" pattern in the protocol. For example, if a property is called test.sbdf, the data function on the Spotfire side will have an output data table called "test". On the Pipeline Pilot side, we expect a TIBCO Spotfire® SBDF Writer component with

$(rundirectory)/test.sbdfas Output File.

When no properties follow this pattern, the first SBDF file present in the run directory will be used.

When Files is set to None (all options are unchecked) there will be no Table output for the Data Function.

If expected files are not found when the Data Function executes, it will fail.

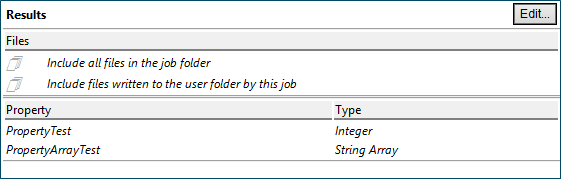

-

Property: they will be sent as Output of the Data Function. When using an Array type the output will be of Column type, otherwise it will be a Value. Byte Array and XMLDocument type are converted to string, avoid using them since they do not exist in TIBCO Spotfire®.
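The output rules above can be modeled as follows. This Python sketch only illustrates the documented behavior and is not the Connector's implementation: the function names, the files_enabled flag standing in for the Files option, and the representation of the run directory as a list of file names are assumptions. The order in which run-directory files are listed, and the table name used in the fallback case, are not specified by the documentation, so the sketch simply takes the first listed file and its stem.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Property types with no TIBCO Spotfire equivalent; they are sent as strings.
STRING_CONVERTED_TYPES = {"Byte Array", "XMLDocument"}


def table_outputs(result_properties, run_directory_files, files_enabled=True):
    """Map each output table name to the SBDF file it is read from."""
    if not files_enabled:
        # Files set to None: the Data Function has no table output.
        return {}
    expected = [p for p in result_properties if fnmatchcase(p, "*.sbdf")]
    if not expected:
        # No "*.sbdf" property: fall back to the first SBDF file in the run
        # directory (listing order is an assumption of this sketch).
        expected = [f for f in run_directory_files if f.endswith(".sbdf")][:1]
    missing = [f for f in expected if f not in run_directory_files]
    if not expected or missing:
        # Expected SBDF files were not produced: the Data Function fails.
        raise FileNotFoundError(f"SBDF output not found: {missing or '*.sbdf'}")
    # "test.sbdf" becomes the output data table "test".
    return {PurePosixPath(f).stem: f for f in expected}


def property_output(prop_type, is_array):
    """Return (output kind, value type) for a Results Web Service property."""
    value_type = "String" if prop_type in STRING_CONVERTED_TYPES else prop_type
    return ("Column" if is_array else "Value", value_type)


if __name__ == "__main__":
    print(table_outputs(["test.sbdf", "count"], ["test.sbdf", "log.txt"]))
    print(property_output("XMLDocument", False))
```

For the example in the Files rule, a "test.sbdf" property backed by "$(rundirectory)/test.sbdf" yields a single output table named "test", while the non-matching "count" property is handled by property_output instead.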

How to use Pipeline Pilot Data Functions

You can use Data Functions in two ways:

- File > Add Data Tables...: this option allows you to load tables from different sources, one of which is a Data Function. When you select it, the Data Functions available in TIBCO Spotfire®, including the Pipeline Pilot Data Functions, are listed for selection. Selecting one of them opens the parameter settings interface, where you provide the data the Pipeline Pilot protocol needs.

- Insert > Data Function...: this option allows you to choose one of the available Data Functions. After you set the input parameters, the output of the Pipeline Pilot Data Function is loaded as a new Data Table, a new set of columns or a new set of rows, depending on your selection.

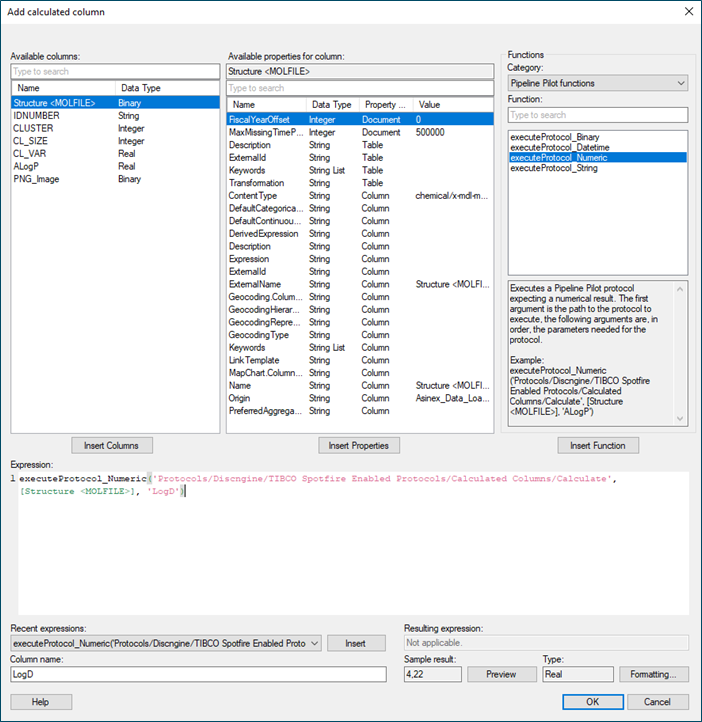

Pipeline Pilot Calculated Columns - beta

In TIBCO Spotfire®

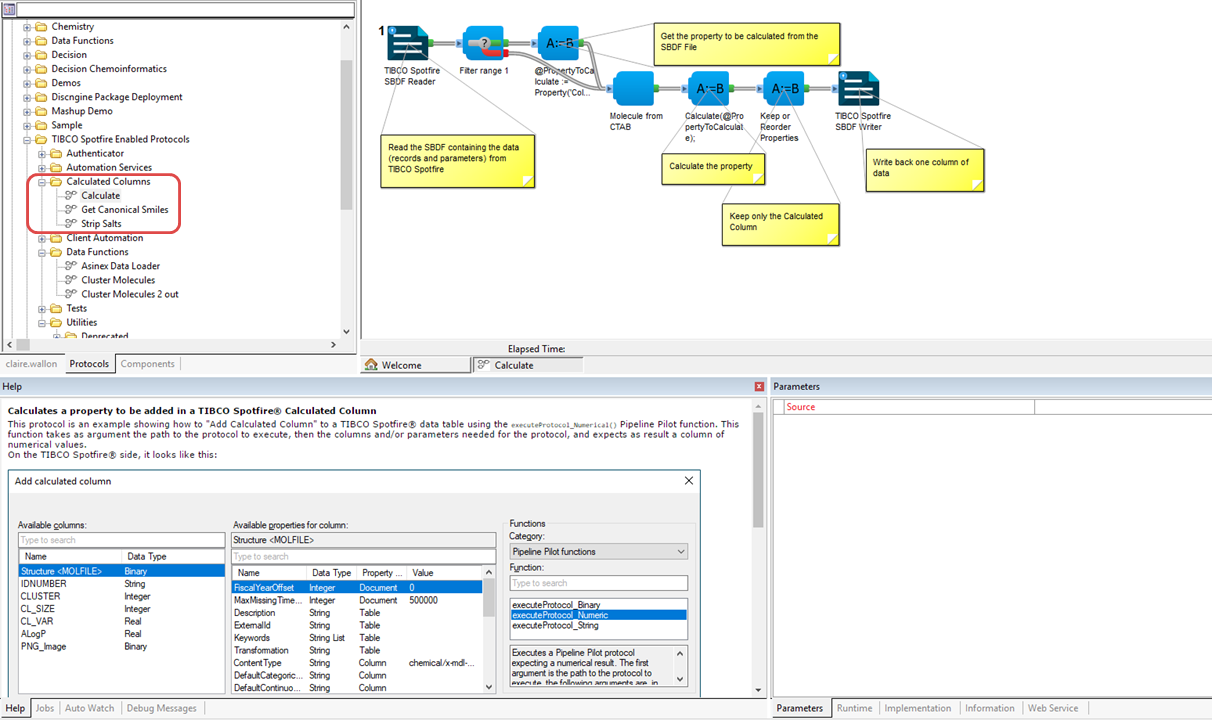

In TIBCO Spotfire® you can "Add calculated columns" to a data table using one of the readily available functions. With the Connector Data Functions module you have access to the "Pipeline Pilot functions" category, containing a set of functions that allow you to call Pipeline Pilot protocols to calculate the column to be added.

These functions take the protocol path as their first argument; the following arguments contain the data and/or parameters to be sent to the protocol. Each function expects a specific return type: for example, the protocol called by executeProtocol_Numeric must return a column containing numerical values.

The implementation of Pipeline Pilot Calculated Columns is shared with the Pipeline Pilot Data Functions, so the Pipeline Pilot user session must be set through the Pipeline Pilot Data Functions login panel (View > Pipeline Pilot Login Panel).

In Pipeline Pilot

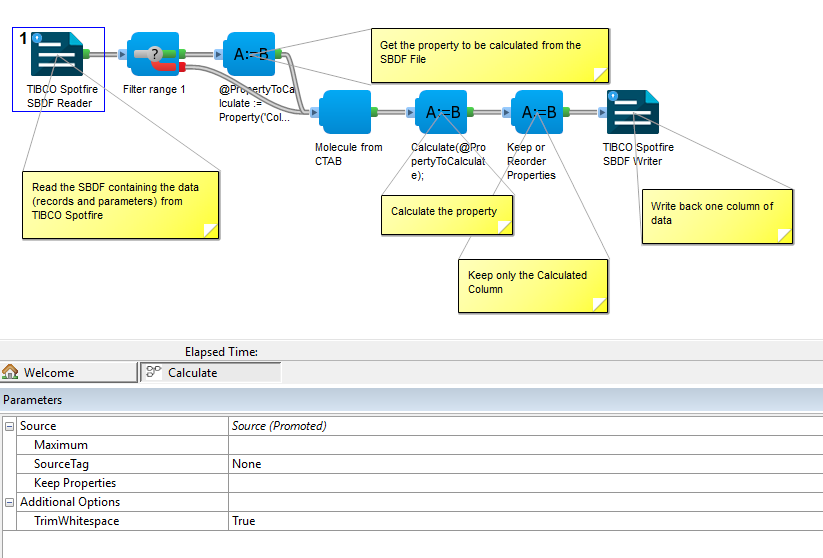

The protocol must at least read an SBDF file containing TIBCO Spotfire® data (records and arguments from the executeProtocol function) and write an SBDF file containing the calculated column to be added to the TIBCO Spotfire® data table. For example:

The Source parameter of the TIBCO Spotfire SBDF Reader component must be promoted; it is the only parameter the protocol receives. This SBDF file contains one column per argument of the executeProtocol function (except the protocol path), named column[index] with the index starting at 0 (column0, column1, column2, ...). The number of rows in the SBDF file corresponds to the number of records in the TIBCO Spotfire® data table.

Then, the protocol must write an SBDF file containing only the column to be added. This file must be written to the Job Directory and named out.sbdf.
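The layout of the data exchanged with the protocol can be sketched in Python. This is an illustrative model only: build_input_table and output_location are hypothetical helpers, the SBDF content is represented as a plain dictionary mapping column names to values, the protocol path in the example is made up, and each argument is assumed to already hold one value per record of the data table.

```python
from pathlib import PurePosixPath


def build_input_table(call_args, record_count):
    """Model the SBDF input that an executeProtocol_* call sends to the protocol.

    call_args[0] is the protocol path; every other argument becomes a column.
    Each argument is assumed to hold one value per record of the data table.
    """
    protocol_path, *arguments = call_args
    table = {}
    for index, values in enumerate(arguments):
        if len(values) != record_count:
            raise ValueError(
                f"column{index} has {len(values)} values, expected {record_count}"
            )
        # Columns are named column0, column1, ... in argument order.
        table[f"column{index}"] = list(values)
    return protocol_path, table


def output_location(job_directory):
    """Path where the protocol must write its single result column."""
    return str(PurePosixPath(job_directory) / "out.sbdf")


if __name__ == "__main__":
    path, table = build_input_table(
        ["Protocols/Examples/MyCalculation", ["CCO", "c1ccccc1"], [0.5, 0.5]], 2
    )
    print(path, sorted(table))
    print(output_location("/jobs/1234"))
```

The protocol path is consumed before numbering starts, which is why the first data argument becomes column0 rather than column1.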

Note: by default, the TIBCO Spotfire SBDF Writer creates a column containing the molecule, so if this is not the column you want to send, switch off the Write Molecule parameter.

To help you get started with this feature, we provide three example protocols in our Pipeline Pilot collection:

Known limitations

- executeProtocol_Binary: the output MIME type must be the same as that of the column passed as argument (under investigation with TIBCO Spotfire®).

- If the Pipeline Pilot session is invalid, an error notification is displayed. You need to close the "Add calculated column" interface before opening View > Pipeline Pilot Login Panel.

Pipeline Pilot Automation Tasks

Overview

TIBCO Spotfire® Automation Services is a web service for automatically executing multi-step jobs within your TIBCO Spotfire® environment. See the official documentation for more details.

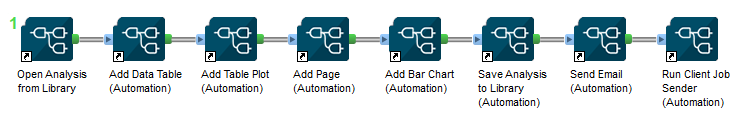

Briefly, a TIBCO Spotfire® Automation Services job file must be created, describing a set of pre-defined tasks. The job can then either be executed immediately or scheduled to be executed periodically using the TIBCO Spotfire® Client Job Sender.

How to create and run a job

Example protocol:

As in the TIBCO Spotfire® user interface, a job must always start by opening a document. The task components then follow one another, ending with the "Run Client Job Sender" component, which writes the XML task file and launches the Client Job Sender tool.
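The ordering constraint can be checked before a job is launched. The Python sketch below is illustrative only: check_job_order is a hypothetical helper that is not part of the Connector, and only the "Run Client Job Sender" component name comes from this documentation; "Open Document" and "Export Image" are placeholder names.

```python
# Only "Run Client Job Sender" is a documented component name; "Open Document"
# and "Export Image" are hypothetical placeholders used for illustration.
OPEN_DOCUMENT = "Open Document"
RUN_CLIENT_JOB_SENDER = "Run Client Job Sender"


def check_job_order(components):
    """Return the ordering problems found in a chain of task component names."""
    problems = []
    if not components or components[0] != OPEN_DOCUMENT:
        problems.append("a job must start by opening a document")
    if not components or components[-1] != RUN_CLIENT_JOB_SENDER:
        problems.append(f'a job must end with "{RUN_CLIENT_JOB_SENDER}"')
    return problems


if __name__ == "__main__":
    print(check_job_order([OPEN_DOCUMENT, "Export Image", RUN_CLIENT_JOB_SENDER]))
    print(check_job_order(["Export Image"]))
```

Returning a list of problems rather than raising on the first one lets a protocol author see every ordering issue in a single check.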